GPUs

You can never have enough...

Nvidia’s market capitalisation increased by $184 billion in a single day this Monday! It is one of the largest one-day increases in market capitalisation for a company in capitalism’s history. Nvidia makes GPUs, and they are selling like hot cakes right now. By the end of this article, you will thoroughly understand why GPUs are in such high demand.

A new revolution has begun - the Intelligence Revolution

The time gap between revolutions is shrinking from millennia to centuries to decades to years. The Agricultural Revolution occurred 10,000 years ago. The Industrial Revolution, 300 years ago. The Information Revolution, 50 years ago. And now, we are at the precipice of a new kind of revolution, the Intelligence Revolution.

What is the Intelligence Revolution? The Intelligence Revolution is about the exponential increase of intelligence around us and what we do with it. It’s not something in the future. It is here and now.

Intelligence is the ability to solve problems. We, humans, stand at the top of the hierarchy in the intelligence spectrum. We have built complex language, computers, cities, satellites, you name it. We’re pretty good, eh.

But, there’s a new intelligence knocking at our doors. Actually, it has been knocking for years now. This kind of intelligence resides inside of computers. So, we call it artificial intelligence.

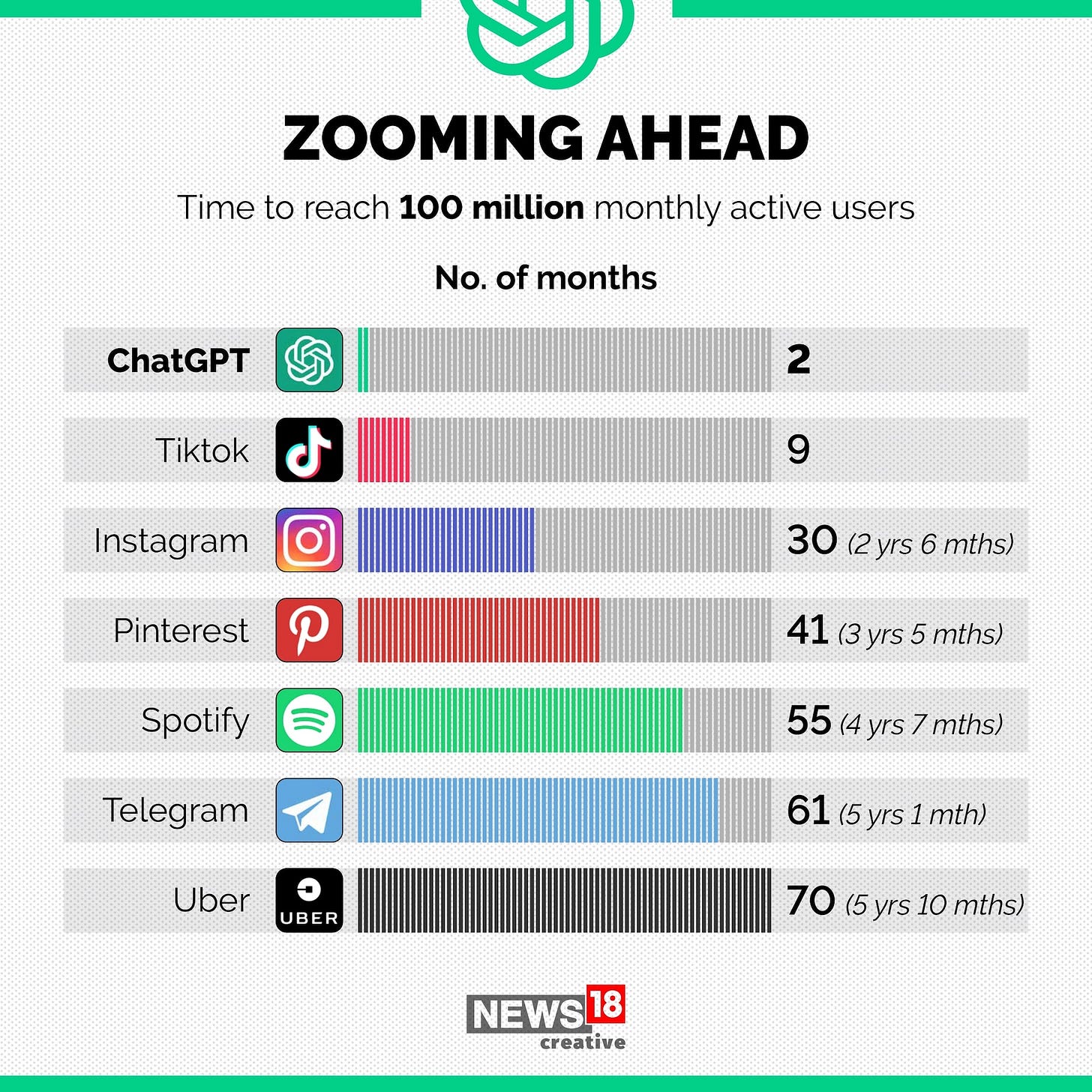

ChatGPT, an AI chatbot, went from 0 to 100 million users in under two months. This kind of growth is unprecedented for consumer applications.

The astonishing part about ChatGPT is not its efficacy in solving one type of problem. What’s surprising is its ability to help with all kinds of problems, from coding to writing blogs. It’s a know-it-all AI chatbot.

ChatGPT might be ‘the iPhone moment for AI’.

Until now, in all the software we have built, we never gave instructions to the machine in natural language. We built special languages such as Java, Python and Go to instruct the machine. Every step to solve the problem was coded into the machine by us.

Now, there’s a new layer on top of computers. With this new layer, we communicate with computers like we do with other humans, that is, via natural language such as English. This new layer is powered by a large language model trained to understand language. Few know what it takes computationally to run this layer.

Let me show you the matrix

If ChatGPT is the frontend, then, a large language model is its backend. When you send an input to ChatGPT, it passes that input to a large language model. The number of computations required for the model to produce the output is astonishing.

All of this computation is matrix multiplication and addition. That’s right. The same matrix multiplication and addition you studied in high school. GPUs are best suited to perform these calculations. But, Satpreet, how many calculations are we talking about?
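To make that concrete, here is a toy sketch of one step inside a model: a matrix multiplication followed by an addition. The sizes and numbers are made up for illustration, and a real model repeats this with far larger matrices, but the operation is the same kind.

```python
# Toy illustration only: the matrices and values below are invented.

def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n); both are lists of rows."""
    k = len(b)
    n = len(b[0])
    return [
        [sum(row[i] * b[i][j] for i in range(k)) for j in range(n)]
        for row in a
    ]

def add_bias(matrix, bias):
    """Add the bias vector to every row of the matrix."""
    return [[value + bias[j] for j, value in enumerate(row)] for row in matrix]

# Two tokens, each represented by three numbers (hypothetical values).
tokens = [[1.0, 2.0, 3.0],
          [0.0, 1.0, 0.0]]

# A 3 x 2 weight matrix and a bias vector: eight "parameters" in total.
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [1.0, 1.0]]
bias = [0.5, -0.5]

output = add_bias(matmul(tokens, weights), bias)
print(output)  # [[4.5, 4.5], [0.5, 0.5]]
```

Notice that every weight takes part in one multiplication and one addition for each token. A GPU can do huge numbers of these small, independent operations at the same time, which is why it suits this workload.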

A large language model such as GPT-3 contains 175 billion parameters. Think of these parameters as the connections between neurons in the brain. You input text, it passes through these parameters and voila, you get your output.

The rule of thumb is that it takes 2*n*p calculations for a model to produce output text, where n is the number of tokens (think of them as words) and p is the number of parameters in the model. For GPT-3, the computational effort required to process a 1,000-token input is around 350 trillion calculations!
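The arithmetic behind that number can be checked in a few lines. This is a minimal sketch of the rule of thumb only; the function name is mine, and the rule itself is an approximation.

```python
def inference_calculations(num_tokens: int, num_parameters: int) -> int:
    """Rule of thumb from the text: about 2 * n * p calculations."""
    return 2 * num_tokens * num_parameters

GPT3_PARAMETERS = 175_000_000_000  # 175 billion

calcs = inference_calculations(1_000, GPT3_PARAMETERS)
print(f"{calcs:,} calculations")          # 350,000,000,000,000 calculations
print(f"{calcs / 1e12:.0f} trillion")     # 350 trillion
```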

That’s just for one query you asked ChatGPT. We’ve never built such computationally expensive consumer software before.

To run a consumer application like ChatGPT for 5 billion users, you need around 300,000-500,000 top-of-the-line data center GPUs like the Nvidia A100. And that’s just one application. We are going to build hundreds and thousands of such applications that use a large language model. Now you see why GPUs are selling like hot cakes.
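If you want to play with an estimate like this yourself, here is a rough back-of-the-envelope sketch. It is not the calculation behind the figure above. It assumes one 1,000-token query per user per day, load spread evenly over the day, and the A100’s published peak of 312 trillion 16-bit calculations per second. The `utilization` parameter (the fraction of that peak actually achieved) is a guess you should vary.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def gpus_needed(users, queries_per_user_per_day, tokens_per_query,
                parameters, peak_calcs_per_second, utilization):
    """Rough GPU count, assuming load is spread evenly across the day."""
    calcs_per_day = (users * queries_per_user_per_day
                     * 2 * tokens_per_query * parameters)  # 2 * n * p per query
    calcs_per_gpu_per_day = peak_calcs_per_second * utilization * SECONDS_PER_DAY
    return calcs_per_day / calcs_per_gpu_per_day

A100_PEAK = 312e12  # published peak for 16-bit tensor math; real throughput is lower

# Utilization values are assumptions, not measurements.
for utilization in (1.0, 0.5, 0.2):
    estimate = gpus_needed(5e9, 1, 1_000, 175e9, A100_PEAK, utilization)
    print(f"utilization {utilization:.0%}: about {estimate:,.0f} GPUs")
```

The answer swings a lot with the assumptions: more queries per user, longer conversations, peak-hour traffic or lower utilization all push the number up.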

Training models is just the tip of the computation iceberg

Training GPT-3 took on the order of 10^23 computations. ChatGPT will probably do more computations than that per day for 5 billion users!
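Here is a quick sanity check of that comparison. It uses the 2*n*p rule of thumb and assumes, purely for illustration, that each user sends a single 1,000-token query per day to a model of GPT-3’s size.

```python
TRAINING_CALCS = 1e23                 # order-of-magnitude figure from the text

users = 5_000_000_000
queries_per_user_per_day = 1          # assumption for illustration
calcs_per_query = 2 * 1_000 * 175_000_000_000   # 2 * n * p

daily_inference_calcs = users * queries_per_user_per_day * calcs_per_query
ratio = daily_inference_calcs / TRAINING_CALCS

print(f"Daily inference: {daily_inference_calcs:.2e} calculations")
print(f"That is about {ratio:.1f}x the training figure, every day")
```

Under these assumptions, a single day of usage already costs more than an order of magnitude more computation than the whole training run.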

It is not clear to most people that running these models in production is computationally more expensive than training them.

Most applications being built today use existing language models such as GPT-3, PaLM, etc. Some companies are training their own models for specific needs. But, a majority of software is being built by using existing models.

To be clear, training models is not going anywhere. We’ll keep training the existing ones to improve them and create new ones for specific use cases. It is a continuous cycle. The main point to understand is that running these models is computationally more expensive than training them.

What’s ahead?

Will GPUs be the only hardware to perform AI computations? Google has built its own chips called TPUs (Tensor Processing Units) for such use cases. Other tech behemoths plan to do the same.

There’s only one major supplier of data center GPUs, Nvidia, with a dominant 71% market share. This might be the biggest bottleneck for companies trying to get their hands on GPUs.

One thing is clear: the demand for GPUs and related hardware is only going to increase with time. The Intelligence Revolution has only begun and has a lot of tailwind. Every human endeavour will soon use artificial intelligence to increase productivity, efficacy and efficiency. The scarcest resource on this planet is intelligence, and we need more of it if we are to solve the challenges that lie ahead of us. We only have 7 billion minds, and they too can only increase linearly. But, with artificial intelligence that runs on GPUs, hardware is the limit.